The purpose of this procedure is to ensure that the deployment step completes successfully and results in a test environment that is aligned with the requirements and plans. For systems built on OpenStack and Kubernetes, deployment frameworks such as Kolla, TripleO, Kubespray or Airship are available as starting points. Developing new services that take advantage of emerging technologies like edge computing and 5G can help generate more revenue for many businesses today, especially telecommunications and media companies. Distributing these services across many data centers adds orchestration overhead: the sites must be synchronized and managed both individually and as part of a larger, connected environment at the same time.

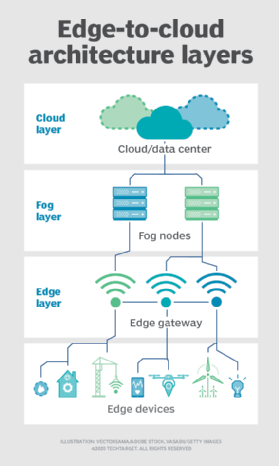

To ensure the success of testing, the installation itself needs to be verified, for instance, by checking that the services were installed and configured correctly. Further testing of the edge infrastructure needs to take the choice of architectural model into consideration. For example, in some cases the decision might be to configure the system to keep the instances running, while in other cases the right approach would be to destroy the workloads if the site becomes isolated. These architectural changes introduce new challenges for the lifecycle of the building blocks.

Edge computing is a strategic architecture that's growing in popularity, but its different permutations and myriad use cases make it difficult to pin down. Is edge computing about mobile? Or IoT? Does it run on-prem, or on-device? The amount of data processing and computational power needed to support these technologies is increasing by orders of magnitude. As I stated earlier, you can't expect to install any old database for edge computing and achieve success.

The edge layer contains edge data centers and Internet of Things (IoT) gateways. These run on a local area network, which could be fiber, wireless, 5G or older networks such as 4G and earlier. Each of these nodes is an important part of the overall edge computing architecture.

Reducing backhaul and latency and improving quality of service (QoS) are good reasons for pushing content caching and management out to the network edge. A caching system can be as simple as a basic reverse proxy or as complex as a whole software stack that not only caches content but also provides additional functionality, such as video transcoding based on the user equipment (UE) device profile, location and available bandwidth.

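
At the simple end of that range, the core of an edge cache is a bounded key-value store that serves hits locally and falls back to the origin on a miss. The Python sketch below assumes an in-memory least-recently-used (LRU) eviction policy and a hypothetical `fetch_from_origin` callback; it illustrates the idea only and is not a reverse-proxy implementation. A real deployment would add HTTP handling, TTLs and cache-control semantics.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Minimal LRU cache illustrating a basic edge caching tier.

    `fetch_from_origin` is a hypothetical callback that retrieves content
    from the central cloud (the origin) when the edge does not hold it.
    """

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self._store = OrderedDict()  # key -> content, least recently used first
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._store:
            # Hit: serve locally and mark the entry as most recently used.
            self._store.move_to_end(key)
            self.hits += 1
            return self._store[key]
        # Miss: fetch over the backhaul and keep a copy at the edge.
        self.misses += 1
        content = self.fetch_from_origin(key)
        self._store[key] = content
        if len(self._store) > self.capacity:
            # Evict the least recently used entry.
            self._store.popitem(last=False)
        return content

    def __contains__(self, key: str) -> bool:
        return key in self._store
```

For example, with `capacity=2`, requesting `/a`, `/a`, `/b`, `/c` yields one hit and three misses, and `/a` is evicted when `/c` arrives.
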
In this article, we will explain what edge computing is, describe relevant use cases for the telecommunications and media industry along with the benefits for other industries, and finally present what an end-to-end architecture that incorporates edge computing can look like. Both of these are in-depth topics, but I'll briefly touch on each.

In the diagram, you also see data being synchronized. By spreading data processing across every layer of your architecture, you achieve greater speed, resilience, security and bandwidth efficiency. It's important to choose a database with the right capabilities and features. Finally, a database is embedded directly in select edge mobile and IoT devices, allowing them to keep processing even in the event of total network failure. The next layer down is the edge layer.

As discussed earlier, there is no single solution that would fulfill every need. It takes too much time to collect data points on the component, send them to the cloud for processing, and then wait for a recommended course of action. They can be extended or leveraged as examples of solutions for performing the process described above to evaluate some of the architecture options for edge. Initial video processing is done by the drones and the device edge. Interestingly, while cloud transformation started later in the telecom industry, operators have been pioneers in extending cloud computing out to the edge. And when connectivity permits, only aggregated data needs to be sent to the cloud for long-term storage, saving on bandwidth costs.
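
The pattern in the last sentence, processing raw data locally and forwarding only aggregates when the uplink is available, can be sketched in a few lines of Python. The window boundaries, the choice of count/min/max/mean as the summary, and the `uplink_available` flag are assumptions for illustration; a real system would also persist the pending queue so that it survives restarts.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class Aggregate:
    count: int
    minimum: float
    maximum: float
    average: float


@dataclass
class EdgeAggregator:
    """Buffers raw readings at the edge and ships only summaries upstream."""

    buffer: List[float] = field(default_factory=list)
    pending: List[Aggregate] = field(default_factory=list)

    def record(self, value: float) -> None:
        # High-velocity raw data stays on the edge node.
        self.buffer.append(value)

    def close_window(self) -> None:
        # Summarize the current window, then discard the raw readings.
        if not self.buffer:
            return
        self.pending.append(Aggregate(
            count=len(self.buffer),
            minimum=min(self.buffer),
            maximum=max(self.buffer),
            average=mean(self.buffer),
        ))
        self.buffer.clear()

    def sync(self, uplink_available: bool) -> List[Aggregate]:
        # Send queued aggregates only when connectivity permits;
        # otherwise keep them for a later attempt.
        if not uplink_available:
            return []
        sent, self.pending = self.pending, []
        return sent
```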

Communications service providers (CSPs) can use edge computing and 5G to route user traffic to the lowest-latency edge nodes more securely and efficiently. However, there are common models that describe high-level layouts, which become important for day-2 operations and the overall behavior of the systems. For the Centralized Control Plane model, the edge infrastructure is built as a traditional single data center environment that is geographically distributed, with WAN connections between the controller and compute nodes. This model also suits scenarios where autonomous behavior is not a requirement. Our next article in this series will dive deeper into the different layers and tools that developers need to implement an edge computing architecture.

With edge computing, cameras located close to the event can determine whether a human is caught in the fire by identifying characteristics typical of a human being, and clothing that humans normally wear that might survive the fire. Embedding these devices into the city's infrastructure and assets helps monitor infrastructure performance and provides insightful information about the behavior of these assets.

As edge architectures are still in an early phase, it is important to identify the advantages and disadvantages of each model's characteristics to determine the best fit for a given use case. The real challenge lies in efficient and thorough testing of the new concepts and evolving architecture models. Testing the integrated systems to emulate the configuration and circumstances of production environments can be quite challenging.

Now, imagine that a sensor on a critical component of the platform begins to detect signs of likely failure, a potential breakdown that could lead to a dangerous turn of events. Data-intensive applications that require large amounts of data to be uploaded to the cloud can run more effectively by using a combination of 5G and edge computing.

The term refers to an architecture rather than a specific technology. The highest focus is still on reducing latency and mitigating bandwidth limitations. The closer the end users are to the data and signal processing systems, the better the workflow can handle low-latency, high-bandwidth traffic.

All edge computing architectures have an important requirement: using the right kind of database. Your database must also be able to instantly replicate and synchronize data across database instances, whether they're in the cloud or in an edge data center. From a bird's-eye view, most of those edge solutions loosely resemble interconnected spider webs of varying size and complexity. We will also explore some of the differentiating requirements and ways to architect the systems so they do not require a radically new infrastructure just to comply with the requirements. Discussing and developing additional details around the requirements and solutions for integrating storage and further new components into edge architectures is part of the future work of the OSF Edge Computing Group.

Operators collect data from sensors all over the platform as part of a daily routine, measuring things like pressure, temperature, wave height, and other factors that affect operating capacity. With an edge data center, measurements or readings that need immediate attention are detected instantly, and operators can respond in real time. The footage of interest can then be transmitted to a local edge for further analysis and an appropriate response, including raising alerts.

Beth Cohen, Distinguished Member of Technical Staff, Verizon; Gergely Csatári, Senior Open Source Specialist, Nokia; Shuquan Huang, Technical Director, 99Cloud.
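
To make the "detected instantly" point concrete, the check below runs entirely at the edge: each reading is compared against operating limits without a round trip to the cloud. The metric names follow the platform examples (pressure, temperature, wave height), but the `LIMITS` values are made-up placeholders, not real platform specifications, and a production system would more likely use trend- or model-based detection than fixed thresholds.

```python
from typing import Dict, List, Tuple

# Hypothetical operating limits (low, high) per metric; placeholders only.
LIMITS: Dict[str, Tuple[float, float]] = {
    "pressure_bar": (1.0, 250.0),
    "temperature_c": (-10.0, 90.0),
    "wave_height_m": (0.0, 12.0),
}


def evaluate(readings: Dict[str, float]) -> List[str]:
    """Return alert messages for readings outside their limits.

    Runs locally on the edge node, so an out-of-range value is flagged
    immediately instead of after a cloud round trip. Metrics without
    configured limits are reported rather than silently ignored.
    """
    alerts: List[str] = []
    for metric, value in readings.items():
        if metric not in LIMITS:
            alerts.append(f"{metric}: no limits configured")
            continue
        low, high = LIMITS[metric]
        if value < low:
            alerts.append(f"{metric} below limit: {value} < {low}")
        elif value > high:
            alerts.append(f"{metric} above limit: {value} > {high}")
    return alerts
```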

The checks can be as simple as using the ping command bi-directionally, verifying that specific network ports are open, and so forth. In addition to these considerations, the expectations for functions such as auto-scaling will also differ due to possible resource constraints, which need to be reflected in the test suites as well.

With edge computing, operators can:

- Create predictive maintenance models to detect equipment breakdown risks
- Increase privacy of sensitive information
- Enable operations even when networks are disrupted
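
A minimal version of such a reachability check, using TCP connection attempts rather than ICMP ping (which usually needs raw-socket privileges or an external `ping` binary), might look like the Python sketch below. The target list and timeout are assumptions; running the same script from each end of a link approximates the bi-directional check described above.

```python
import socket
from typing import Dict, Iterable, Tuple


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


def check_ports(
    targets: Iterable[Tuple[str, int]], timeout: float = 2.0
) -> Dict[Tuple[str, int], bool]:
    """Check each (host, port) pair; run from both ends of a link to
    approximate a bi-directional check."""
    return {(host, port): port_open(host, port, timeout) for host, port in targets}
```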

The good news is that edge computing is based on an evolution of an ecosystem of trusted technologies. In this brief overview of edge computing technology, we've shown how edge computing is relevant to challenges faced by many industries, especially the telecommunications industry. Edge computing is composed of technologies that take advantage of computing resources available outside of traditional and cloud data centers, so that the workload is placed closer to where data is created and actions can be taken in response to an analysis of that data. Related functions needed to execute the workload of the infrastructure are distributed between the central and the edge data centers. Building an edge infrastructure involves various well-known components that were not originally implemented specifically for edge use cases.

For instance, a recent study presents a disruptive approach consisting of running standalone OpenStack installations in different geographical locations, with collaboration between them on demand. Examples include a Point of Sale system in a retail deployment or the industrial robots operating in an IoT scenario. The following illustrates the implementation, with further extensions having since been made. For instance, profile attributes may all have been set correctly, but are all the resources reachable, in good health, and able to communicate with each other as expected? This enables it to provide the extremely high bandwidth required between the radio equipment and the applications, or to fulfill demands for low latency.

This section describes shrimp farms, which are controlled ecosystems where humans and automated tools oversee the entire lifecycle of the animals, from the larval phase to the fully grown, harvestable stage. This use case is also a great example of equipment deployed and running in poor environmental conditions.

I like to visualize edge architectures as a set of layers, which makes the concept easier to understand. In the cloud layer, you see a database server installed in the central data center, as well as the interconnected data centers across cloud regions. Further processing of the data collected by various sensors is done in the centralized cloud data center. Relevant information can be sent to the base station, which then transmits the data to the relevant endpoint, which might be a content delivery network in the case of a video transmission or an automobile manufacturer's data center.

With the explosion of video streaming, online gaming and social media, combined with the roll-out of 5G mobile networks, the need to push caching out to the far edge has increased dramatically. In recent prototypes, smart caching frameworks use an agent in the central cloud that redirects content requests to the optimum edge data center, using algorithms based on metrics such as UE location and the load on the given edge site. There are still potential obstacles, such as not having all the images available locally due to limitations of storage and cache sizes.

A further similarity between the different use cases, regardless of industry, is the increased demand for functions like machine learning and video transcoding at the edge. To fulfill the high-performance and low-latency communication needs, at least some of the data processing and filtering needs to stay within the factory network, while still being able to use the cloud resources more effectively. This model still allows for small edge data centers with small footprints, where there would be a limited amount of compute services and the preference would be to devote the majority of the available resources to the workloads. The upstream infrastructure in the local edge might therefore have additional security concerns to address.

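
The redirect decision made by such an agent can be approximated as a scoring function over candidate edge sites. The sketch below is not the algorithm used by any particular framework: it assumes planar coordinates in kilometres as a stand-in for UE location, a utilization value between 0 and 1 as the load metric, hypothetical weights and a load cut-off, and it returns `None` to signal a fallback to the central cloud when no suitable edge site holds the content.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Sequence


@dataclass
class EdgeSite:
    name: str
    x_km: float          # hypothetical planar position of the site
    y_km: float
    load: float          # utilization, 0.0 (idle) to 1.0 (saturated)
    has_content: bool    # whether the requested object is cached here


def pick_site(
    ue_x_km: float,
    ue_y_km: float,
    sites: Sequence[EdgeSite],
    distance_weight: float = 1.0,
    load_weight: float = 50.0,
    max_load: float = 0.9,
) -> Optional[EdgeSite]:
    """Choose the edge site with the lowest weighted distance + load score.

    Sites without the content or at/above `max_load` are skipped; None means
    the request should be served from the central cloud instead.
    """
    candidates = [s for s in sites if s.has_content and s.load < max_load]
    if not candidates:
        return None

    def score(site: EdgeSite) -> float:
        distance = hypot(site.x_km - ue_x_km, site.y_km - ue_y_km)
        return distance_weight * distance + load_weight * site.load

    return min(candidates, key=score)
```

Raising `load_weight` favours lightly loaded sites over nearby ones; with `load_weight=0` the agent degenerates to nearest-site selection.
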
The advent of 5G has made edge computing even more compelling, enabling significantly improved network capacity, lower latency, higher speeds, and increased efficiency. Signaling functions like the IMS control plane or Packet Core now rely on cloud architectures in large centralized data centers to increase flexibility and use hardware resources more efficiently. In addition, the network can become heavily loaded in such instances. The key to achieving success with edge computing architectures is in leveraging an edge-ready database.

This process, which is applied in the field of research, can also be used to help build new components and solutions that fit the requirements of edge computing use cases, even though some of the steps still need more tooling before all checks can be run as easily as simple unit tests. The diagram below describes the general process that is executed when performing experimental campaigns. When all the preparations are done, the next step is benchmarking the entire integrated framework. To ensure stable and trustworthy outcomes, it is recommended to look into the best practices of the scientific community to find the most robust solution.

Many other cases exist that require evaluation as part of the application development roadmap and future state architecture. Edge computing is how you power always-fast, always-on applications. In the early days of edge computing, architects had to build it all from scratch. Also, the standard should allow for management of the full lifecycle of the application, from build through run and maintain. Factories are using more automation and leveraging cloud technologies for flexibility, reliability and robustness, which also opens the possibility of introducing new methods such as machine vision and machine learning to increase production efficiency.

With a basic understanding of edge computing, let's take a brief moment to discuss 5G and its impact on edge computing before we discuss the benefits and challenges around edge computing. While data security is a benefit in that the data can be limited to certain physical locations for specific applications, overall security is an additional challenge when adopting edge computing. Whether a business problem or use case could or should be solved with edge computing will need to be evaluated on a case-by-case basis. Now more than ever, edge computing has the promise of a very bright future indeed!

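
For the benchmarking step, one basic practice borrowed from experimental research is to repeat each measurement and report its spread rather than a single number. The sketch below times a hypothetical `operation` callable and summarizes the samples with a mean, a sample standard deviation and an approximate 95% confidence interval. It uses the normal approximation (z = 1.96) for simplicity, which is an assumption; with few runs, a Student's t quantile would be more appropriate.

```python
import math
import statistics
import time
from typing import Callable, Dict, Sequence


def summarize(samples: Sequence[float]) -> Dict[str, float]:
    """Mean, sample standard deviation and approximate 95% CI of the mean."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(samples)
    stdev = statistics.stdev(samples)
    # Normal approximation; use a t quantile for small sample counts.
    half_width = 1.96 * stdev / math.sqrt(len(samples))
    return {
        "runs": float(len(samples)),
        "mean": mean,
        "stdev": stdev,
        "ci95_low": mean - half_width,
        "ci95_high": mean + half_width,
    }


def benchmark(operation: Callable[[], None], runs: int = 30, warmup: int = 3) -> Dict[str, float]:
    """Run `operation` repeatedly and summarize wall-clock durations in seconds."""
    for _ in range(warmup):
        operation()  # discard warm-up runs (caches, lazy initialization)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    return summarize(samples)
```
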
This section will guide you through some use cases to demonstrate how edge computing applies to different industries and highlight the benefits it delivers. For example, in a smart factory scenario, high-velocity data captured from an assembly line can be processed and analyzed at the edge, but for network bandwidth efficiency only aggregated data is synchronized to the cloud for long-term storage. This tiered approach insulates applications from central and regional data center outages. The service is provisioned, and drones start capturing the video. Imagine an oil drilling platform in the middle of the North Sea.

The two models are the Centralized Control Plane and the Distributed Control Plane models. In the Centralized Control Plane model, the management and orchestration services are centralized, which makes this architecture less resilient to failures caused by network connection loss. When you move the processing of critical data to the place where it happens, you solve the problems of latency and downtime. Some edge sites might only have containerized workloads, while other sites might be running VMs. Since this is a high-level discussion, the assumption is that there will be enough compute, storage and networking functionality at the edge to cover the basic needs; any specialized configurations or features are out of scope.

Is edge computing about 5G, or smart environments? So how do you build your own edge computing architecture? And how could architects ensure standardization and consistency of architectural components between locations, as well as redundancy and high availability? Because real-time decisions are needed and transmitting large amounts of sensor data should be avoided, edge computing becomes a necessary technology for delivering a city's mission-critical solutions such as traffic, flood, security and safety, and critical infrastructure monitoring. For example, AWS has rolled out a comprehensive set of services that facilitate edge computing for a variety of use cases. The adoption of tools should also take into consideration the need to handle application and network workloads in tandem. These are both open source projects with extensive testing efforts that are available in an open environment. The network connectivity between the edge nodes requires a focus on availability and reliability, as opposed to bandwidth and latency. Edge computing is an alternative architecture to cloud computing for applications that require high speed and high availability.

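
The difference between the two models shows up most clearly when a site loses its WAN link. The Python sketch below encodes that as a small decision function. The action strings are illustrative assumptions, not the behavior of any specific OpenStack or Kubernetes release, and the isolation policy reflects the earlier point that some deployments keep instances running while others destroy workloads when a site becomes isolated.

```python
from enum import Enum
from typing import List


class ControlPlane(Enum):
    CENTRALIZED = "centralized"   # controllers in the central site only
    DISTRIBUTED = "distributed"   # each edge site runs its own control plane


class IsolationPolicy(Enum):
    KEEP_RUNNING = "keep_running"
    DESTROY_WORKLOADS = "destroy_workloads"


def on_connection_loss(model: ControlPlane, policy: IsolationPolicy) -> List[str]:
    """Return the (illustrative) actions an edge site takes when isolated."""
    actions: List[str] = []
    if model is ControlPlane.CENTRALIZED:
        # No local controller: management and orchestration are unreachable.
        actions.append("suspend provisioning and lifecycle operations")
    else:
        # A local control plane can keep managing the site autonomously.
        actions.append("continue lifecycle operations locally")
    if policy is IsolationPolicy.KEEP_RUNNING:
        actions.append("keep existing workloads running")
    else:
        actions.append("stop and remove workloads")
    return actions
```
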
5G telecom networks promise extreme mobile bandwidth, but to deliver it, they require massive new and improved capabilities from the backbone infrastructures to manage the complexities, including critical traffic prioritization. The complexity of edge architectures often demands a granular and robust pre-deployment validation framework. Teams will require more than the traditional network operations tools (and even more than software-defined network operations tools), as support teams will need tools to help manage application workloads in the context of the network across many more distributed environments than before, each with differing strengths and capabilities. Architects had to create their own extended infrastructure beyond the cloud, and they had to consider where that infrastructure would live: on premises, in a private cloud, or on-device. Adaptability is crucial to evolve existing software components to fit into new environments or give them elevated functionality.

To describe what it all means in practice, take a Radio Access Network (RAN) as an example. In a 5G architecture targeting the edge cloud with a Cloud RAN (C-RAN) approach, the Baseband Unit (BBU) can be disaggregated into a Central Unit (CU), a Distributed Unit (DU) and a Remote Radio Unit (RRU), where the DU functionality is often virtualized (vDU) in close proximity to the users and combined with hardware offloading solutions to handle traffic more effectively. An outage in a production run costs you $250,000 per hour. The building blocks are already available to create edge deployments for OpenStack and Kubernetes. New test cases need to be identified, along with values that are representative of typical circumstances and system failures. The behavior of the edge data centers in case of a network connection loss might differ based on the architectural model. This section covers two common high-level architecture models that show the two different approaches.

Following the Pareto principle, a small share of content accounts for most requests, so by caching only 20% of their content, service providers can have roughly 80% of traffic pulled from edge data centers. This is encouraging content providers to migrate from a traditional, regional PoP CDN model to edge-based intelligent and transparent caching architectures.

In addition, your database needs to be embeddable. For example, you might power POS systems for a chain of retail stores using edge data centers in each city where stores are concentrated. While a focus of this article has been on application and analytics workloads, network functions are also a key set of capabilities that should be incorporated into any edge strategy, and thus into our edge architecture. It is important to manage workloads in discrete ways: the less discrete they are, the more limited we are in how we can deploy and manage them.

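
The 20%/80% figure is a rule of thumb whose accuracy depends on how skewed content popularity actually is. The Python sketch below computes the share of requests an edge cache would serve if it held the most popular fraction of items, assuming static popularity and per-item request counts that you would obtain from your own logs; the counts in the example are made up.

```python
from typing import Sequence


def edge_hit_share(request_counts: Sequence[int], cached_fraction: float) -> float:
    """Fraction of requests served from the edge when the most popular
    `cached_fraction` of items is cached (static popularity assumed)."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    ranked = sorted(request_counts, reverse=True)
    total = sum(ranked)
    if total == 0:
        return 0.0
    cached_items = int(len(ranked) * cached_fraction)
    return sum(ranked[:cached_items]) / total
```

For example, `edge_hit_share([80, 5, 5, 5, 5], 0.2)` returns 0.8, while uniform popularity (`[10] * 10`) gives only 0.2, which is why the rule of thumb should be checked against real traffic before sizing edge caches.
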
In future articles in this series, we will look at these application and network tools in more detail. In a nutshell, edge computing moves more computational power and resources closer to end users by increasing the number of endpoints and locating them nearer to the consumers, be they users or devices. Edge computing is a technology evolution that is not restricted to any particular industry. In our previous white paper, the OSF Edge Computing Group defined cloud edge computing as resources and functionality delivered to end users by extending the capabilities of traditional data centers out to the edge, either by connecting each individual edge node directly back to a central cloud or to several regional data centers, or in some cases by connecting the edge nodes to each other in a mesh. In 2019, IBM partnered with telecommunications companies and other technology participants to build a Business Operation System solution.

Further components are needed to test more complex environments where growing numbers of building blocks are integrated with each other. This is especially true in edge architectures, where resources must be available over complex networking topologies. The models described above are still under development as more needs and requirements are gathered in specific areas. Defining common architectures for edge solutions is a complicated challenge in itself, but it is only the beginning of the journey.

Bruce Jones, StarlingX Architect & Program Manager, Intel Corp.; Adrien Lebre, Professor in Computer Science, IMT Atlantique / Inria / LS2N; David Paterson, Sr.
